The field of generative AI is advancing at a mind-boggling pace. I've been enjoying Ben's excellent daily newsletter to try to stay on top of the wave and understand what's unfolding.

From natural language processing to image and video generation, the capabilities of these systems are becoming increasingly impressive (and sometimes alarming... but that's a topic for another post). However, despite these technological advancements, there is one particularly fascinating bottleneck holding back the full potential of generative AI today: humans.

Generative AI relies on excellent human instructions and intent

I know what you're thinking. No, I'm not talking about the AI robot overlords taking over our planet and eliminating us in the process. Rather, in order for a generative AI system to produce truly impressive output, it needs clear and detailed instructions and intent from the humans who are prompting it. By default, unfortunately, we suck at both.

Most people don't realize this until they give instructions to an AI for the first time. You see, generative AI isn't very... intelligent. You might think that you're good at giving instructions because your co-workers listen to you and do what you want them to do. But in the time your co-workers turned from tiny little bébés into the productive members of society they are now, they not only experienced the world around them, but also listened to hundreds of thousands of instructions from their parents, teachers and others. Through an impressive amount of trial and error, they developed a beautiful intuition for filling in the gaps and inferring the context of your question. AI doesn't do that... yet. AI behaves like the dad in this video:

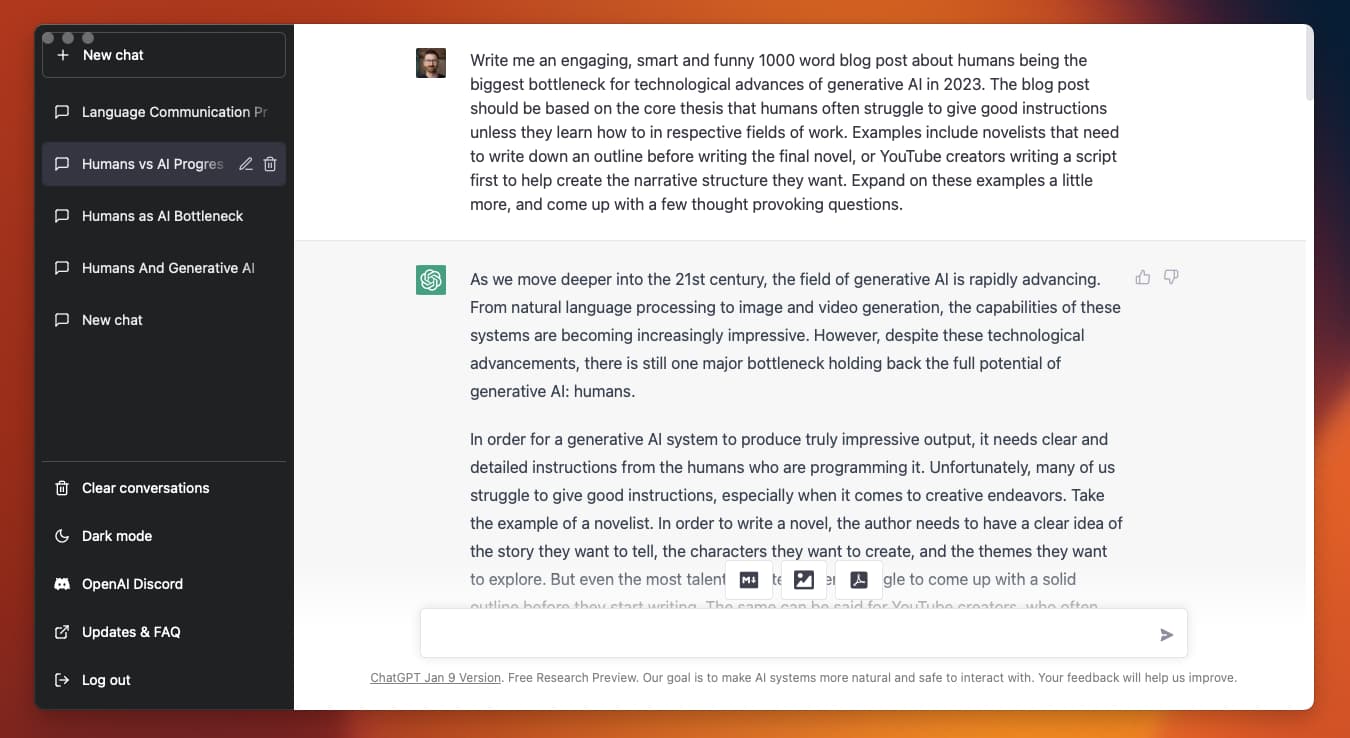

In the last few weeks, I've been blowing many of my friends' minds by introducing them to the wonders of ChatGPT, Notion AI and Stable Diffusion, only to have them excitedly download DiffusionBee on their Mac, type in "picture of a dog" and get disappointed by this:

Sure, it resembles a dog. But it's not a great picture. Worse, it's not the picture they didn't know they wanted. In their mind, they expected a beautiful close-up shot that a professional photographer took during golden hour with a 35mm lens at a 1.8 f-stop to produce that nice bokeh. But they didn't ask for those things. And they might not even know the words for the things they want to see (f-stop? bokeh? 35mm? All gibberish until you've used a camera). Coming up with great instructions, or what the AI world calls prompts, is surprisingly difficult, so much so that Prompt Engineering is an actual new job category, with companies like Anthropic paying $250k-$335k for a decent one.
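To make the gap concrete, here is a minimal sketch of what "asking for those things" could look like if you spelled out each part of the picture separately. The `ImagePrompt` class and its field names are my own invention for illustration, not part of DiffusionBee or Stable Diffusion; all it does is join the filled-in parts into one comma-separated prompt string.

```python
from dataclasses import dataclass, field


@dataclass
class ImagePrompt:
    """Hypothetical container for the details a vague prompt leaves out."""

    subject: str
    shot: str = ""
    lighting: str = ""
    lens: str = ""
    extras: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Join only the parts that were actually filled in.
        parts = [self.subject, self.shot, self.lighting, self.lens, *self.extras]
        return ", ".join(p for p in parts if p)


# What my friends typed.
vague = ImagePrompt(subject="picture of a dog")

# What they had in their heads.
detailed = ImagePrompt(
    subject="picture of a dog",
    shot="close-up shot by a professional photographer",
    lighting="golden hour",
    lens="35mm lens, f/1.8",
    extras=["bokeh"],
)

print(vague.render())
print(detailed.render())
```

The code is trivial on purpose. The point is that the empty fields force you to notice what you never specified.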

But even great instructions don't matter if there's no intent

Unfortunately, mastering the skill of giving extremely detailed instructions is only step one of two to make AI work for you. The other is intent.

This isn't just true with AI – take the example of a writer. Being able to write great prose doesn't make you a great novelist, the same way that being able to shoot and edit video doesn't make you a great YouTube creator. In order to write a novel, the author needs to have a clear idea of the story they want to tell, the characters they want to create, and the themes they want to explore. And even the most talented writers often struggle to come up with a solid outline before they start writing. The same can be said for many YouTube creators, who often spend hours writing scripts and crafting narrative structures before filming a single frame. Generative AI, then, is perhaps easiest to compare to the art of photography: everyone can take a photo, but few become photo artists. What you capture, how you capture it, and why you capture it matter. This stuff is hard. Coming up with great ideas is hard. We don't know what we want until we put in the work.

On a side note, this is also why I think Neuralink (while fascinating!) is not going to give Elon what he dreams of: highly effective human-to-human brain communication. Elon thinks that the 'compression' that happens in your brain when a thought is translated into speech makes comprehension by the other person less efficient. I don't think it's that simple. In fact, I think the opposite is often true: by encoding your thoughts into language, you bring order to the chaos of the raw thought and shape it into a form that is comprehensible to people other than you.

When everything goes, nothing will

Also worth noting is that the most difficult form of ideation is free-form ideation – the act of coming up with something from nothing. Humans greatly benefit from existing frameworks, structures or narratives they can build upon, like writing the third novel of a series, or creating a mashup of a song. But sitting in front of an empty screen? Terrifying.

In the case of ChatGPT or Stable Diffusion, many people simply freeze in front of the intimidating single text box, behind which a seemingly limitless, all-knowing, genie-like assistant is waiting for their input.

Solutions

So, what can be done to overcome these human bottlenecks? For one, the emerging AI community could invest in training programs that teach people how to effectively communicate their ideas to AI: workshops on AI-assisted outlining and scriptwriting, for example, as well as courses on the technical aspects of generative AI (to understand how the AI is interpreting your prompts).

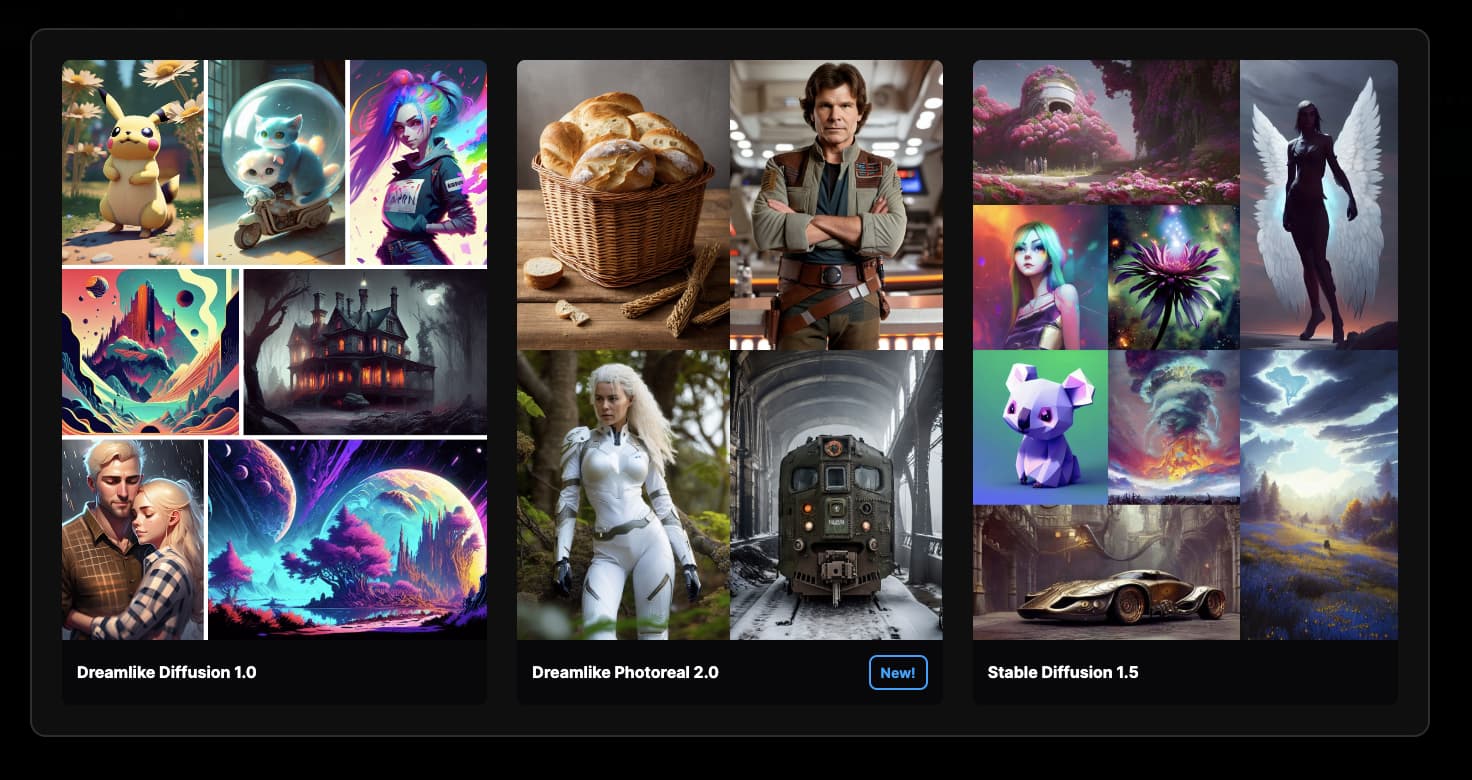

On the other hand, we need to improve AI systems' abilities to understand and interpret the instructions given to them by humans. Drawing on my many years of experience building tools and workflows, I think the AI industry needs to come up with ever better interfaces that take a more structured approach to prompting and offer templates on top of the models to guide and inspire use. Luckily, many are already starting to build accessibility layers on top of generative AI, like:

- Awesome ChatGPT prompts gives you useful starting points for ChatGPT.

- Stable Diffusion frontends like Dreamlike.art or Lexica feature fine-tuned models deviating from the original Stable Diffusion that are much better at creating certain styles (many are also auto-tuning and expanding prompts under the hood).

- Frontends like the DiffusionBee app come with lots of 'prompt parts' that can be added to give inspiration.
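For the curious, here is a rough sketch of what such a template layer could look like in its simplest form. It is not how any of the tools above work internally; the `TEMPLATES` dictionary, the `blog_outline` template and the `build_prompt` function are hypothetical names I made up, built on Python's standard `string.Template`.

```python
from string import Template

# Hypothetical template library: each entry names the blanks a user must fill.
TEMPLATES = {
    "blog_outline": Template(
        "Write an outline for a blog post about $topic, "
        "aimed at $audience, in a $tone tone."
    ),
}


def build_prompt(name: str, **fields: str) -> str:
    """Fill a named template and complain about any blank left empty."""
    template = TEMPLATES[name]
    try:
        return template.substitute(**fields)
    except KeyError as missing:
        # substitute() raises KeyError for the first placeholder without a value.
        raise ValueError(
            f"Template '{name}' still needs: {missing.args[0]}"
        ) from None


prompt = build_prompt(
    "blog_outline",
    topic="generative AI",
    audience="curious beginners",
    tone="friendly",
)
print(prompt)
```

Instead of one empty text box, the user gets a handful of small, answerable questions, and the error tells them which one they skipped.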

Ultimately, the key to unlocking the full potential of generative AI is for humans to learn how to effectively communicate their ideas to these systems, and for AI systems to be designed in a way that makes them more accessible to humans.

I'm deep down the AI rabbit hole, so expect to hear more from me on the topic in the coming months - the good, the bad and the ugly. Onwards!