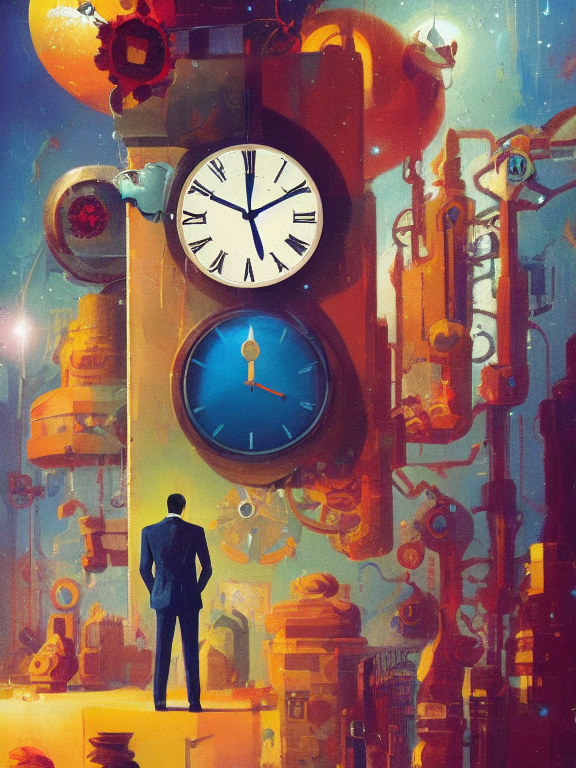

We're closer than you might think to seeing the first video applications in the exploding field of generative AI. Runway, an AI-assisted, browser-based video editing suite, has been at the forefront of generative video for a while now, and it just hit its biggest milestone yet: Gen-1, which the company calls "The Next Step Forward for Generative AI."

Type 1: video2video

While Gen-1 is a major upgrade over existing solutions (like EbSynth combined with Disco Diffusion), it doesn't generate entirely new video from a text prompt. Instead, it focuses on video2video: a process that takes one of your own videos as input, plus a modifier (like a style prompt), and generates new variations of it. Runway focuses on:

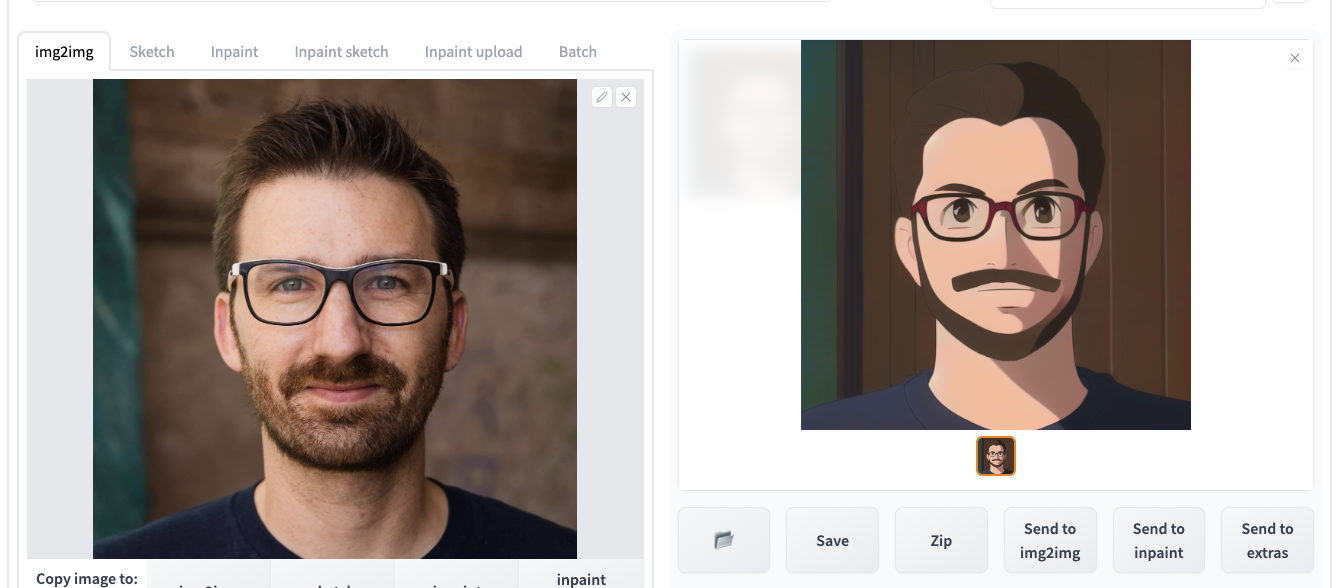

- Stylization (apply a new style to an existing video, e.g. realistic to animation)

- Storyboard (turn mockups into fully stylized renders)

- Mask Mode (isolate an area of a video and modify just that piece; essentially a variation of Stylization)

- Render Mode (turn untextured 3D renders into realistic outputs)

- Customization Mode (customize the model for even higher fidelity)

Ultimately, they're all variations of the same principle: take a video, break it down into still frames, modify each frame with a diffusion model ("img2img"), then stitch the frames back together into a final video.
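That principle can be sketched in a few lines of Python. This is a toy illustration, not Runway's pipeline: a "frame" here is just a list of pixel values, and `toy_stylize` is a hypothetical stand-in for a real img2img diffusion call (it simply posterizes each pixel). A real tool would decode and re-encode frames with a video library instead.

```python
from typing import Callable, List

# Hypothetical representation: one frame as a flat list of 0-255 pixel values.
Frame = List[int]


def video2video(frames: List[Frame], stylize: Callable[[Frame], Frame]) -> List[Frame]:
    """Run an img2img-style transform on every frame independently and
    return the results in their original order (the "stitch" step)."""
    return [stylize(frame) for frame in frames]


def toy_stylize(frame: Frame) -> Frame:
    # Hypothetical stand-in for a diffusion img2img call: snap each pixel
    # to one of four levels (0, 85, 170, 255) for a crude "cartoon" look.
    return [round(p / 85) * 85 for p in frame]


# A two-frame "video" with three pixels per frame.
clip = [[0, 100, 200], [10, 110, 210]]
print(video2video(clip, toy_stylize))  # [[0, 85, 170], [0, 85, 170]]
```

Because each frame is processed on its own, nothing ties one frame to the next. With a deterministic function like this that's harmless, but a real diffusion model samples fresh noise on every call, and that's exactly where consistency breaks down.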

If you're thinking "wait, I can do this myself," then yes, you're somewhat right! However, the big problem with generative image models is consistency. It's very hard to get a similar, consistent look across two images you feed to a model. As a result, your final video will look extremely janky and confusing.

Gen-1's innovation is that Runway found novel ways to increase temporal consistency, in other words, how closely each frame matches the look and feel of the previous one (read the paper if you're curious; there's some cool info about their process involving depth maps, motion vectors and more). But make no mistake: while Gen-1 is a major milestone, we're still quite a bit off from temporal consistency that's "good enough" to fool the viewer's eye into believing they're looking at one continuous scene. What you get today looks more like sped-up stop motion.
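To make "temporal consistency" concrete, here's a toy Python sketch. It is not Gen-1's method; it only shows the failure mode. `flicker_score` is a hypothetical metric (the mean absolute pixel change between consecutive frames), and `noisy_stylize` is a hypothetical stand-in for a diffusion model that draws fresh random noise on every call. Even a perfectly static scene flickers when each frame gets independent noise, while reusing the same noise for every frame keeps the output stable.

```python
import random
from typing import List

Frame = List[float]  # hypothetical: one frame as a flat list of pixel values


def flicker_score(frames: List[Frame]) -> float:
    """Mean absolute pixel change between consecutive frames.
    Lower is more temporally consistent; 0.0 means every frame is identical."""
    diffs = [
        abs(a - b)
        for prev, curr in zip(frames, frames[1:])
        for a, b in zip(prev, curr)
    ]
    return sum(diffs) / len(diffs) if diffs else 0.0


def noisy_stylize(frame: Frame, rng: random.Random, strength: float) -> Frame:
    # Hypothetical stand-in for a diffusion call: each call adds its own noise.
    return [p + rng.uniform(-strength, strength) for p in frame]


def stylize_video(frames: List[Frame], seed: int, reuse_seed: bool,
                  strength: float = 20.0) -> List[Frame]:
    rng = random.Random(seed)
    out = []
    for frame in frames:
        if reuse_seed:
            # Restart the generator so every frame gets the same noise pattern.
            rng = random.Random(seed)
        out.append(noisy_stylize(frame, rng, strength))
    return out


static_scene = [[100.0, 150.0, 200.0]] * 5  # the same frame five times
independent = stylize_video(static_scene, seed=0, reuse_seed=False)
shared = stylize_video(static_scene, seed=0, reuse_seed=True)
print(flicker_score(independent) > 0)  # True: the output flickers
print(flicker_score(shared))           # 0.0: every output frame is identical
```

In real footage the camera and subjects move, so a fixed seed alone isn't enough to keep frames coherent; that gap is what more elaborate approaches like Gen-1's try to close.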

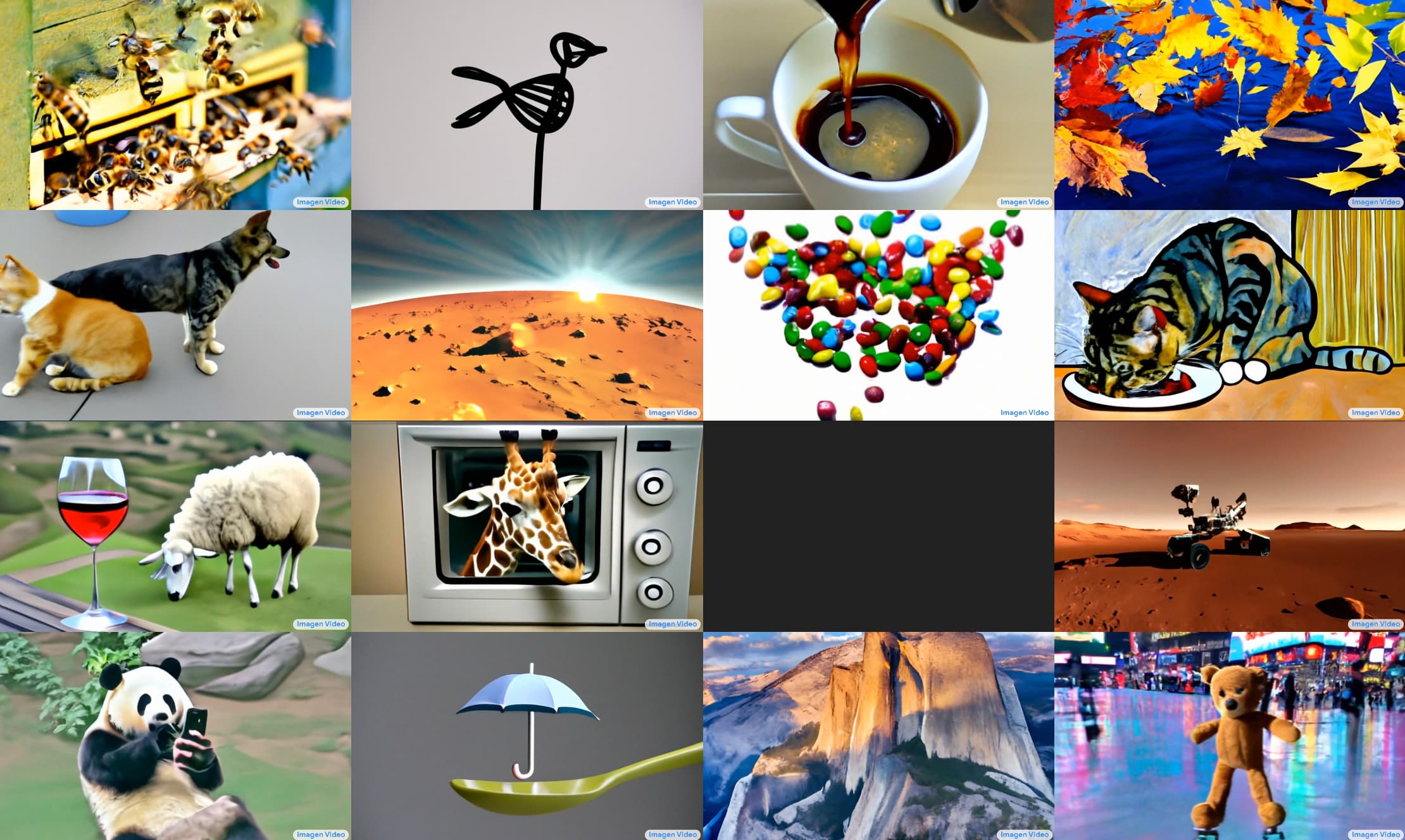

Type 2: text2video

Of course, what most people picture when they hear "video made with AI" is "I talk to ChatGPT, ask it for a video of a cute dog, and get a video." That's called text2video or prompt2video, and while I don't know of any startups that let you do this yourself yet, Meta and Google have shown that it's possible with their Make-A-Video and Imagen Video research projects, respectively.

In very simplified terms, temporal consistency becomes an even bigger problem with text2video than it already is. With video2video, you at least had consistent frames in the original video to work from; now you have none! Each frame in text2video has to be generated from your prompt, e.g. "video of a dog," without a stable visual reference to rely on.

Type 3: Composition

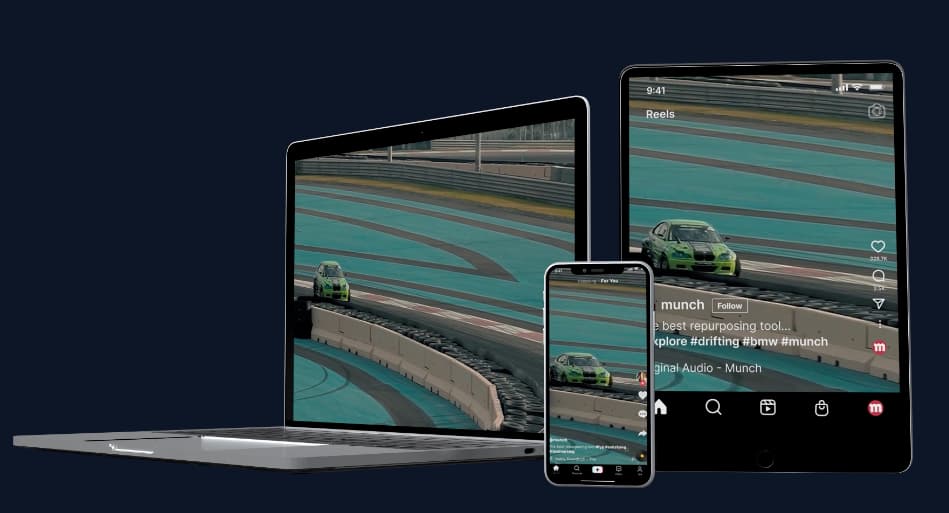

Because video2video and text2video are hard*, the most common type of startup you'll see emerge for now is one focused on composition: taking existing footage, music and other assets, and composing them in novel ways using AI.

* In different ways than composition-type startups; both have their own set of complexities!

I've previously written about products that use AI to convert and auto-crop horizontal video into vertical video, but there are now startups that take this to the next level. Munch, for example, doesn't just convert an existing clip; it extracts the most engaging moments from your videos and turns them into smart, auto-cropped clips to share on social media.

Beware the hype

So why do I think it's useful to know the differences, at least at a high level? Because everyone is frantically trying to grab your attention, and certain startups are somewhat misleading in their advertising.

Take InVideo AI, for example, a startup that claims its product "can turn any content or idea into video, instantly."

Judging from their video and landing page, you might think it's a Type 2 (text2video) startup creating completely new footage from scratch, but that's not the case. After seeing the footage and thinking "yeah, there's no way they leapfrogged Runway et al. that dramatically," I found out it's actually Type 3 (composition), which they state only in the YouTube video description:

> All you have to do is describe your idea in the form of a 'prompt' and InVideo AI will decode it into a script, collect footage from our stock libraries, auto-line it up to the script, make it look great, and GENERATE your video before you can say "WHOA, That's revolutionary".

I'm still excited to try their product, and it could provide a ton of value to folks. But I miss the days of straightforward product marketing that showed the product in action, so we were all on the same page.

Have you tried any of the generative video tools yet? Let me know!