Originally published on 2014/05/21 - Updated and overhauled on 2022/12/13.

Introduction

You may have heard the term frames per second (fps), and if you have, you probably saw it on product labels, in internet discussions and in TV ads. If you're like me, somewhere in the back of your mind it has always bothered you that you don't understand why we use different frame rates for different things, and why, seemingly contradictorily, movie buffs swear by 24 fps while hardcore gamers scoff at games that run below 60. If that's you, then today's your lucky day, as the following article is a deep exploration of the what, why and how of frame rate. It's gonna get pretty crazy. You might be questioning reality when we're done.

Still here? Great, let's dive in.

Frame rate in the early days of filmmaking

When the first filmmakers started to record motion pictures, many early discoveries on how to record and display film were made by trial and error. The first cameras and projectors were hand controlled, and in the early days analog film was very expensive – so expensive that when directors recorded motion on camera, they used the lowest acceptable frame rate for portrayed motion in order to conserve film. That threshold usually hovered at around 16 fps to 24 fps.

When sound was added to the physical film (as an audio track next to it) and played back along with the video at the same pace, hand-controlled playback suddenly became a problem. Turns out that humans can deal with a variable frame rate, but not with a variable sound rate (where both tempo and pitch change), so filmmakers had to settle on a steady rate for both. This rate ended up being 24 fps and remains the standard for motion pictures today. In television, the frame rate had to be slightly modified due to the way CRT TVs sync with AC power frequency.

The human eye vs. frames

But if 24 fps is barely acceptable for motion pictures, then what is the optimal frame rate? That's a trick question, as there isn't one.

Motion perception is the process of inferring the speed and direction of elements in a scene based on visual, vestibular and proprioceptive inputs. Although this process appears straightforward to most observers, it has proven to be a difficult problem from a computational perspective, and extraordinarily difficult to explain in terms of neural processing. - Wikipedia

The eye is not a camera. It does not see motion as a series of frames. Instead, it perceives a continuous stream of information rather than a set of discrete images. Why, then, do frames work at all?

Two important phenomena explain why we see motion when looking at images shown in rapid succession: persistence of vision and the phi phenomenon.

The persistence of vision and the phi phenomenon

Most filmmakers think persistence of vision is the sole reason we can see motion pictures, but that's not all there is to it. Persistence of vision, which has been observed but not scientifically proven, is the phenomenon by which an afterimage seemingly persists for approximately 40 milliseconds (ms) on the retina of the eye. This explains why we don’t see black flicker in movie theaters or (usually) on old CRT screens.

The phi phenomenon, on the other hand, is what many consider the true reason we perceive motion when being shown individual images: It’s the optical illusion of perceiving continuous motion between separate objects viewed rapidly in succession. Even the phi phenomenon is still being debated, though, and the science is inconclusive.

Anyway, what we know for sure is that our brain is very good at helping us fake motion from stills – not perfect, but good enough. Using a series of still frames to simulate motion creates perceptual artifacts, depending largely on the frame rate. Yes, that means no frame rate will ever be optimal, but we can get pretty close.

Common frame rates, from poor to perfect

To get a better idea of the absolute scale of frame rate quality, here’s an overview chart. Keep in mind that because the eye is complex and doesn’t see individual frames, none of this is hard science, merely observations by various people over time.

| Frame rate | Human perception |
|---|---|
| 10-12 fps | Absolute minimum for motion portrayal. Anything below is recognized as individual images. |
| < 16 fps | Causes visible stutter, headaches for many. |
| 24 fps | Minimum tolerable to perceive motion, cost efficient. |
| 30 fps | Much better than 24 fps, but not lifelike. The standard for NTSC video, due to the AC signal frequency. |
| 48 fps | Good, but not good enough to feel entirely real (even though Thomas Edison thought so). Also see this article. |
| 60 fps | The sweet spot; most people won’t perceive much smoother images above 60 fps. |
| ∞ fps | To date, science hasn’t proven or observed our theoretical limit. |

Demo time: How does 24 fps compare to 60 fps?

Yes, it really is quite a stark difference, isn't it? But what's all this talk from movie aficionados about 24 fps being the holy grail? To find out why there's so much resistance against high frame rates in the world of film, let's take a look at The Hobbit.

HFR: Rewiring your brain with the help of a Hobbit

“The Hobbit” was the first popular motion picture shot at twice the standard frame rate, 48 fps, a format dubbed HFR (short for high frame rate). Unfortunately, not everyone was happy about the new look. There were multiple reasons for this, but by far the most common one was the so-called soap opera effect. It also didn't help that the tech was still new. According to my hazy memory of speaking to a few folks in the know, the RED cameras used for filming took in significantly less light when shooting at 48 fps vs. 24 fps (due to the higher shutter speed required), so the crew had to compensate with overly bright lighting for indoor scenes. Many viewers reported that this made elaborate sets and outfits look like theme park attractions and costumes.

Most people’s brains have been conditioned to associate 24 fps with expensive Hollywood productions (see film look), while 50-60 half frames (interlaced TV signals) remind us of 'cheap' TV productions. This in part explains the intense hate by many for the motion interpolation feature on modern TVs (even though modern versions of it are usually pretty good at rendering smooth motion without the artifacts that naysayers commonly cite when dismissing the feature).

Even though higher frame rates are measurably better (by making motion less jerky and fighting motion blur), there’s no easy answer on how to make them feel better. It requires a reconditioning of our brains, and while some viewers enjoyed “The Hobbit” in HFR, others have sworn off high frame rates entirely.

Cameras vs. CGI: The story of motion blur

But if 24 fps is supposedly barely tolerable, why have you never walked out of a cinema, complaining that the picture was too choppy? It turns out that video cameras have a natural feature – or bug, depending on your definition – that most computer generated graphics are missing unless specifically compensated for: motion blur.

Once you see it, the lack of motion blur in many video games and software is painfully obvious. Dmitri Shuralyov has created a nifty WebGL demo that simulates motion blur. Move your mouse around quickly to see the effect.

Motion blur, as defined at Wikipedia, is

...the apparent streaking of rapidly moving objects in a still image or a sequence of images such as a movie or animation. It results when the image being recorded changes during the recording of a single frame, either due to rapid movement or long exposure.

A picture says it better:

In very simple terms, motion blur “cheats” by portraying a lot of motion in a single frame at the expense of detail. This is a primary reason why a movie looks relatively acceptable at 24 fps compared to a video game.

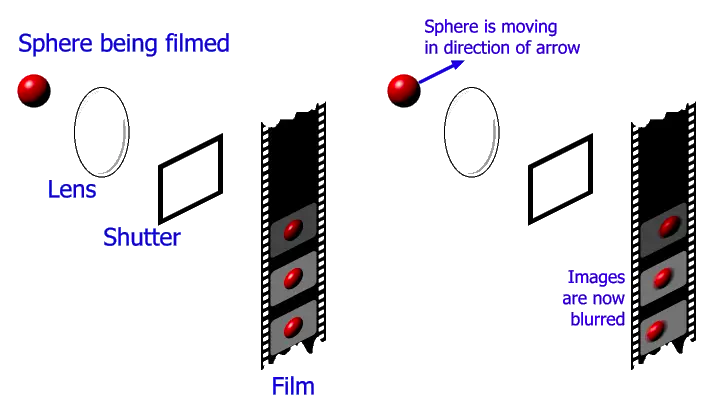

But how does motion blur get captured? In the words of E&S, pioneers in using 60 fps for their mega dome screens:

When you shoot film at 24 fps, the camera only sees and records a portion of the motion in front of the lens, and the shutter closes between each exposure, allowing the camera to reposition the film for the next frame. This means that the shutter is closed between frames as long as it is open. With fast motion and action in front of the camera, the frame rate is actually too slow to keep up, so the imagery ends up being blurred in each frame (because of the exposure time).

Here’s a simplified graphic explaining the process.

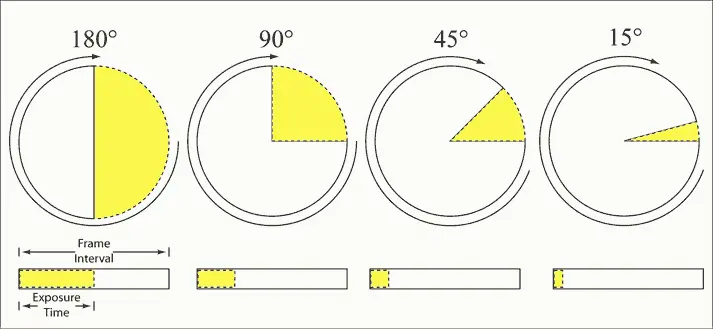

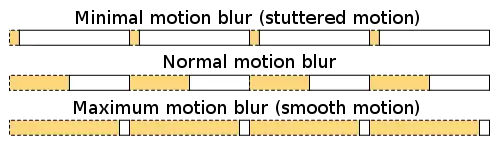

Classical movie cameras use a so-called rotary disc shutter to do the job of capturing motion blur. By rotating the disc, you open the shutter for a controlled amount of time at a certain angle and, depending on that angle, you change the exposure time on the film. If the exposure time is short, less motion is captured on the frame, resulting in less motion blur; if the exposure time is long, more motion is captured on the frame, resulting in more motion blur.

If motion blur is such a good thing, why would a movie maker want to get rid of it? Well, by adding motion blur, you lose detail; by getting rid of it, you lose smoothness. So when directors want to shoot scenes that require a lot of detail, such as explosions with tiny particles flying through the air or complicated action scenes, they often choose a tight shutter that reduces blur and creates a crisp, stop motion-like look.

But a lot of moving pictures are created via software today, not with a camera. If those would benefit from motion blur too, why don't we just add it like in the demo above?

Sadly, even though motion blur would make lower frame rates in games and on web sites much more acceptable, adding it is often simply too expensive. To recreate perfect motion blur, you would need to capture four times the number of frames of an object in motion, and then do temporal filtering or anti-aliasing (there is a great explanation by Hugo Elias here). If making a 24 fps source more acceptable requires you to render at 96 fps, you might as well just bump up the frame rate in the first place, so it’s often not an option. Exceptions are video games that know the trajectory of moving objects in advance and can approximate motion blur, as well as declarative animation systems, and of course CGI films like Pixar’s.
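To make the cost a bit more tangible, here is a minimal sketch of the "render more samples, then average them" idea. Everything in it is made up for illustration: the scene is a hypothetical one-pixel dot on a 16 pixel strip, `render_sample` and `render_blurred_frame` are names I invented, and the plain average stands in for whatever temporal filter a real renderer would use.

```python
def render_sample(t_frames, width=16, pixels_per_frame=8):
    """Render one crisp sample of a hypothetical 1-D scene: a single lit
    pixel that moves `pixels_per_frame` pixels per output frame."""
    strip = [0.0] * width
    strip[int(t_frames * pixels_per_frame) % width] = 1.0
    return strip


def render_blurred_frame(frame_index, subframes=4, width=16):
    """Average `subframes` evenly spaced samples within one frame interval.
    Note the cost: every output frame needs `subframes` full renders."""
    accum = [0.0] * width
    for s in range(subframes):
        t = frame_index + s / subframes  # time measured in output frames
        for x, value in enumerate(render_sample(t, width)):
            accum[x] += value
    return [v / subframes for v in accum]


crisp = render_sample(0)           # one fully lit pixel
blurred = render_blurred_frame(0)  # the same light smeared along the path
print(crisp)
print(blurred)
```

The blurred frame carries the same total brightness as the crisp one, just spread over the pixels the dot passed through, which is the trade of detail for smoothness described earlier.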

A new problem with every display

Over the years, humans created lots of different ways to display motion pictures on a screen or surface, and every display struggles in different ways when it comes to lifelike, accurate motion projection. But before we dive in, we have to talk about refresh rates and how they differ from frame rates.

Refresh rates (Hz) and Frames per second (fps)

If you’re part of a generation that still experienced laptops with disk drives and ever wondered why Blu-Ray playback was so poor on them, it's because the refresh rate of the screen is not evenly divisible by the frame rate of the movie. Yes, the refresh rate and frame rate are not the same thing. Per Wikipedia,

[..] the refresh rate includes the repeated drawing of identical frames, while frame rate measures how often a video source can feed an entire frame of new data to a display.

In other words, the frame rate stands for the number of individual frames shown on screen, while the refresh rate describes the number of times the image on screen is refreshed or updated.

In the best case, refresh rate and frame rate are in perfect sync, but there are good reasons to use a refresh rate that is three times the frame rate in certain scenarios, depending on the projection system being used (for more on the topic, check out What is Monitor Refresh Rate? – Definitive Guide). Got it? Cool. This will become important in a bit.

Movie projectors

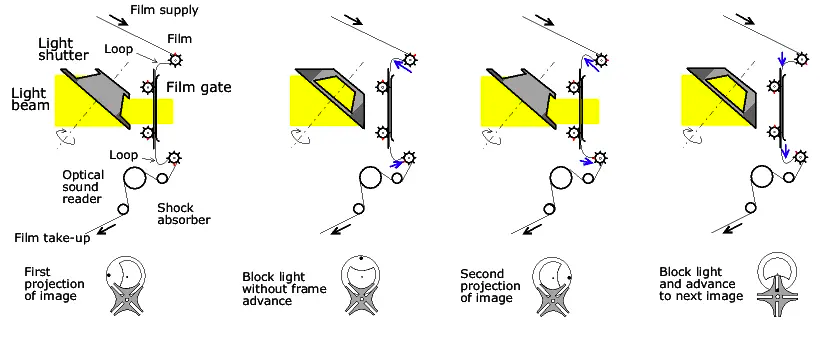

Many people think that movie projectors work by rolling a film past a light source. But if that were the case, we would only see a continuous blurry image. Instead, as with capturing the film in the first place, a shutter is used to project separate frames. After a frame is shown, the shutter is closed and no light is let through while the film moves, after which the shutter is opened to show the next frame, and the process repeats.

That isn’t the whole picture, though (get it? get it? picture? ok I'll see myself out). Sure, this process would show you a movie, but the flicker caused by the screen being black half the time would drive you crazy. This “black out” between the frames is what would destroy the illusion. To compensate for this issue, movie projectors actually close the shutter twice or even three times during a single frame.

Of course, this seems completely counter-intuitive – why would adding more flicker feel like less flicker? The answer is that it reduces the “black out” period, which has a disproportionate effect on the vision system.

The flicker fusion threshold (closely related to persistence of vision) describes the effect that these black out periods have. At roughly 45 Hz, “black out” periods need to be less than ~60% of the frame time, which is why the double shutter method for movies works. Above 60 Hz, the “black out” period can be over 90% of the frame time (needed by displays like CRTs). The full concept is subtly more complicated, but as a rule of thumb, here’s how to prevent the perception of flicker:

- Use a different display type that doesn’t flicker due to no “black out” between frames – that is, always keep a frame on display

- Have constant, non-variable black phases that modulate at less than 16 ms

CRTs

CRT monitors and TVs work by shooting electrons onto a fluorescent screen containing low persistence phosphor. How low is the persistence? So low that you never actually see a full image! Instead, while the electron scan is running, the lit-up phosphor loses its intensity in less than 50 microseconds – that’s 0.05 milliseconds! By comparison, a full frame on your smartphone is shown for 16.67ms.

So the whole reason that CRTs work in the first place is persistence of vision. Because of the long black phase between light samples, CRTs are often perceived to flicker – especially in PAL, which operates at 50 Hz, versus NTSC, which operates at 60 Hz, right where the flicker fusion threshold kicks in.

To make matters even more complicated, the eye doesn’t perceive flicker equally in every corner. In fact, peripheral vision, though much blurrier than direct vision, is more sensitive to brightness and has a significantly faster response time. This was likely very useful in the caveman days for detecting wild animals leaping out from the side to eat you, but it causes plenty of headaches when watching movies on a CRT up close or from an odd angle.

LCDs

Liquid Crystal Displays (LCDs), categorized as a sample-and-hold type display, are pretty amazing because they don’t have any black phases in the first place. The current image just stays up until the display is given a new image.

Let me repeat that: There is no refresh-induced flicker with LCDs, no matter how low the refresh rate.

But now you’re thinking, “Wait – I’ve been shopping for TVs recently and every manufacturer promoted the hell out of a better refresh rate!” And while a large part of it is surely marketing, higher refresh rates with LCDs do solve a problem – just not the one you’re thinking of.

LCD manufacturers implement higher and higher refresh rates because of display or eye-induced motion blur. That’s right; not only can a camera record motion blur, but your eyes can as well! Before explaining how this happens, here are two mind blowing demos that help you experience the effect (click the image).

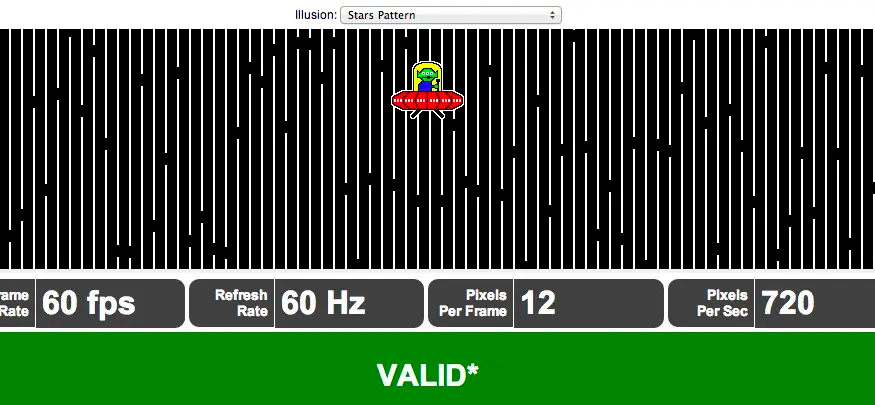

In this first experiment, focusing your vision onto the unmoving flying alien at the top allows you to clearly see the white lines, while focusing on the moving alien at the bottom magically makes the white lines disappear. In the words of the Blur Busters website,

“Your eye tracking causes the vertical lines in each refresh to be blurred into thicker lines, filling the black gaps. Short-persistence displays (such as CRT or LightBoost) eliminate this motion blur, so this motion test looks different on those displays.”

In fact, the effect of our eyes tracking certain objects can’t ever be fully prevented, and is often such a big problem with movies and film productions that there are people whose whole job is to predict what the eye will be tracking in a scene and to make sure that there is nothing else to disturb it.

In the second experiment, the folks at Blur Busters try to recreate the effect of an LCD display vs. short-persistence displays by simply inserting black frames between display frames and, amazingly, it works.

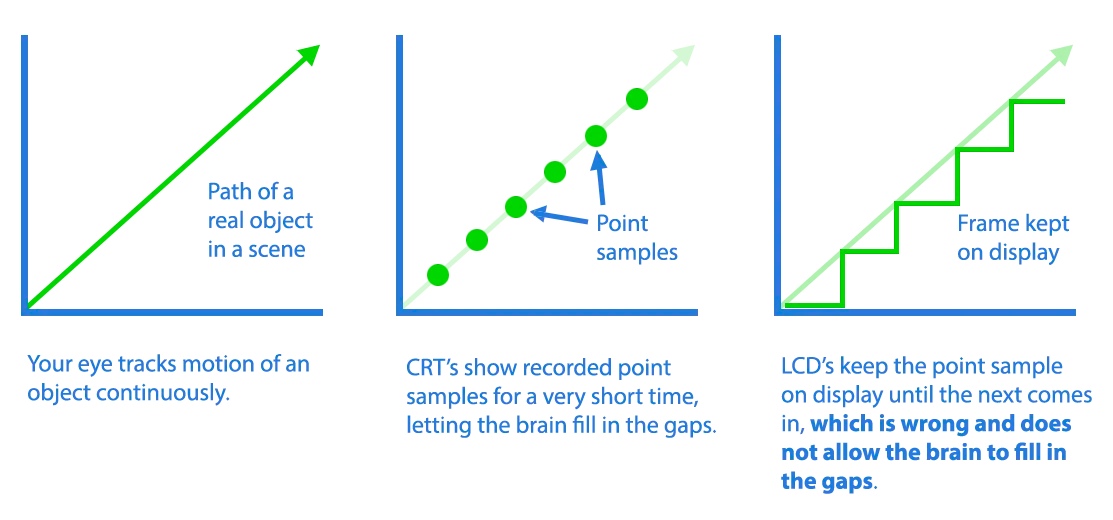

As illustrated earlier, motion blur can either be a blessing or a curse – it sacrifices sharpness for smoothness, and the blurring added by your eyes is never desirable. So why is motion blur such a big issue with LCDs compared to CRTs that do not have such issues? Here’s an explanation of what happens if a frame that has been captured in a short amount of time is held on screen longer than expected.

The following quote from Dave Marsh’s great MSDN article about temporal rate conversion is amazingly accurate and timely for a fifteen-year-old article:

When a pixel is addressed, it is loaded with a value and stays at that light output value until it is next addressed. From an image portrayal point of view, this is the wrong thing to do. The sample of the original scene is only valid for an instant in time. After that instant, the objects in the scene will have moved to different places. It is not valid to try to hold the images of the objects at a fixed position until the next sample comes along that portrays the object as having instantly jumped to a completely different place.

And, his conclusion:

Your eye tracking will be trying to smoothly follow the movement of the object of interest and the display will be holding it in a fixed position for the whole frame. The result will inevitably be a blurred image of the moving object.

Yikes! So what you want to do is flash a sample onto the retina and then let your eye, in combination with your brain, do the motion interpolation.

So how much does our brain interpolate, really?

Nobody knows for sure, but it's clear that there are plenty of areas where your brain helps to create the final image you perceive. Take this wicked blind spot test as an example: it turns out there's a blind spot right where the optic nerve head sits on the retina, a spot that's supposed to be black but gets filled in with interpolated information by our brain.

Strange things happen when fps and Hz aren't in sync

As mentioned earlier, there are problems when the refresh rate and frame rate are not in sync; that is, when the refresh rate is not evenly divisible by the frame rate. What happens when your movie or app begins to draw a new frame to the screen, and the screen is in the middle of a refresh? It literally tears the frame apart:

Here’s what happens behind the scenes. Your CPU/GPU does some processing to compose a frame, then submits it to a buffer that must wait for the monitor to trigger a refresh through the driver stack. The monitor then reads the pending frame and starts to display it (you need double buffering here so that there is always one image being presented and one being composited). Tearing happens when the buffer that's currently being drawn by the monitor from top to bottom gets swapped by the graphics card with the next frame pending consumption. The result is that the top part of your screen is from frame A (the older frame that was still being drawn to the screen), while the bottom part is from frame B (the newer frame that was swapped in too early, before the refresh had finished).
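If that's hard to picture, here is a toy model of a scanout with a badly timed buffer swap. It's only a sketch under simplifying assumptions: each "frame" is a list of rows, the monitor reads one row at a time from top to bottom, and `scan_out` and `swap_at_row` are hypothetical names, not part of any real graphics API.

```python
def scan_out(front, back, swap_at_row=None):
    """Simulate a top-to-bottom scanout of the front buffer. If `swap_at_row`
    is given, front and back buffers are swapped just before that row is read,
    which is what happens when a swap is not synchronized to the refresh."""
    shown = []
    for row in range(len(front)):
        if swap_at_row is not None and row == swap_at_row:
            front, back = back, front
        shown.append(front[row])
    return shown


on_screen = ["on-screen frame"] * 6
incoming = ["incoming frame"] * 6

clean = scan_out(on_screen, incoming)                # swap waits: no tear
torn = scan_out(on_screen, incoming, swap_at_row=3)  # swap mid-refresh: tear

for row in torn:
    print(row)
```

In this model, the rows read before the swap come from the frame that was already on screen and the rows read after it come from the incoming frame, so a single refresh ends up showing pieces of two different moments in time.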

That’s clearly not what we want. Fortunately, there is a solution! Vsync.

From Screen tearing to Vsync..

Vsync, short for vertical synchronization, can effectively eliminate screen tearing. It’s a feature of either hardware or software that ensures that tearing doesn’t happen – that your software can only draw a new frame when the previous refresh is complete. Vsync throttles the consume-vs.-display frequency of the above process so that the image being presented doesn’t change in the middle of the screen.

Thus, if the new frame isn’t ready to be drawn in the next screen refresh, the screen simply recycles the previous frame and draws it again. This, unfortunately, leads to the next problem.

From Vsync to Jitter..

Even though our frames are now at least not torn, the playback is still far from smooth. This time, the reason is an issue that is so problematic that every industry has been giving it new names: judder, jitter, stutter, jank, or hitching. Let’s settle on “jitter”.

Jitter happens when an animation is played at a different frame rate than the rate at which it was captured (or is supposed to play). Often, this means jitter happens when the playback rate is unsteady or variable rather than fixed (as most content is recorded at fixed rates). Unfortunately, this is exactly what happens when trying to display, for example, 24 fps on a screen with 60 refreshes per second. Because 60 cannot be evenly divided by 24, some frames must be held on screen for one refresh longer than others (when not utilizing more advanced conversions), disrupting smooth effects such as camera pans.
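The arithmetic behind this is easy to check. The following sketch counts how many refreshes each source frame stays on screen, assuming the simplest possible conversion where every refresh just shows whichever source frame is current at that instant (`refreshes_per_frame` is a name made up for this example).

```python
def refreshes_per_frame(fps, refresh_hz, frames):
    """Count how many refreshes each source frame stays on screen when every
    refresh shows the source frame that is current at that instant."""
    counts = [0] * frames
    total_refreshes = frames * refresh_hz // fps
    for n in range(total_refreshes):
        # Refresh n happens at n / refresh_hz seconds, which falls inside
        # source frame floor(n * fps / refresh_hz). Integer math avoids rounding.
        counts[n * fps // refresh_hz] += 1
    return counts


print(refreshes_per_frame(24, 60, 4))   # [3, 2, 3, 2] -> uneven, so pans judder
print(refreshes_per_frame(30, 60, 4))   # [2, 2, 2, 2] -> even
print(refreshes_per_frame(24, 120, 4))  # [5, 5, 5, 5] -> even
```

At 60 Hz the 24 fps frames alternate between three and two refreshes, so some moments linger 50% longer than their neighbors, while a 120 Hz screen can give every frame exactly five refreshes.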

In games and websites with lots of animation, this is even more apparent. Many can’t keep their animation at a constant, divisible frame rate. Instead, they have high variability due to reasons such as separate graphic layers running independently of each other, processing user input, and so on. This might shock you, but an animation that is capped at 30 fps looks much, much better than the same animation varying between 40 fps and 50 fps.
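Here is a rough sketch of why the capped animation wins. It assumes a vsynced 60 Hz display and, to keep things simple, a renderer that finishes frames at a perfectly steady pace (a real game varying between 40 and 50 fps would only be messier). The function name `on_screen_refreshes` is made up for illustration.

```python
def on_screen_refreshes(fps, refresh_hz=60, frames=7):
    """Frame k is finished at k / fps seconds and, with vsync, first appears
    at the next refresh at or after that time. Returns how many refreshes
    each frame stays visible before the following frame replaces it."""
    # -(-a // b) is integer ceiling division, so no floating point is involved.
    first_refresh = [-(-k * refresh_hz // fps) for k in range(1, frames + 1)]
    return [b - a for a, b in zip(first_refresh, first_refresh[1:])]


print(on_screen_refreshes(30))  # [2, 2, 2, 2, 2, 2] -> steady 33.3 ms per frame
print(on_screen_refreshes(45))  # [1, 1, 2, 1, 1, 2] -> 16.7 ms, 16.7 ms, 33.3 ms, ...
```

Even though the 45 fps renderer produces more frames, each one is shown for an irregular amount of time, and that irregular rhythm is what your eyes pick up as stutter.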

You don’t have to believe me; experience it with your own eyes. Here’s a powerful microstutter demo.

From Jitter to Telecine..

Telecine describes the process of converting motion picture film to video. Expensive professional converters such as those used by TV stations do this mostly through a process called motion vector steering that can create very convincing new fill frames, but two other methods are still common.

Speed up

When trying to convert from 24 fps to a PAL signal at 25 fps (e.g., TV or video in the UK), a common practice is to simply speed up the original video by about 4%, so that 25 frames play in the time 24 used to. So if you’ve ever wondered why “Ghostbusters” in Europe is a couple of minutes shorter, that’s why. While this method often works surprisingly well for video, it’s terrible for audio. How bad can a 4% speed-up realistically be without an additional pitch correction, you ask? Almost a half-tone bad.

Take this real example of a major fail. When Warner released the extended Blu-Ray collection of Lord of the Rings in Germany, they reused an already PAL-corrected sound master for the German dub, which was sped up by about 4%, then pitched down to correct the change. But because Blu-Ray is 24 fps, they had to convert it back, so they slowed it down again. Of course, it’s a bad idea to do such a two-fold conversion anyway, as it is a lossy process, but even worse, when slowing it down again to match the Blu-Ray video, they forgot to change the pitch back, so every actor in the movie suddenly sounded super depressing, speaking a half-tone lower. Yes, this actually happened, and yes, there was fan outrage, lots of tears, lots of bad copies, and lots of money wasted on a large media recall.
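For the curious, the numbers are quick to work out. This sketch assumes equal temperament (12 semitones per doubling of frequency) and uses a hypothetical 100 minute film; `pal_speedup` is just a name picked for the example.

```python
import math


def pal_speedup(film_fps=24, pal_fps=25, runtime_minutes=100):
    """Return the speed factor, the resulting pitch shift in semitones, and
    the shortened runtime when film is simply played back at the PAL rate."""
    speed = pal_fps / film_fps
    semitones = 12 * math.log2(speed)  # equal temperament: 12 semitones per octave
    new_runtime = runtime_minutes / speed
    return speed, semitones, new_runtime


speed, semitones, new_runtime = pal_speedup()
print(f"speed factor: {speed:.4f}")
print(f"pitch shift:  {semitones:.2f} semitones")
print(f"runtime:      {new_runtime:.1f} minutes instead of 100")
```

The shift comes out at roughly 0.7 of a semitone, and the hypothetical 100 minute film loses about four minutes.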

The moral of the story: speed change is not a great idea.

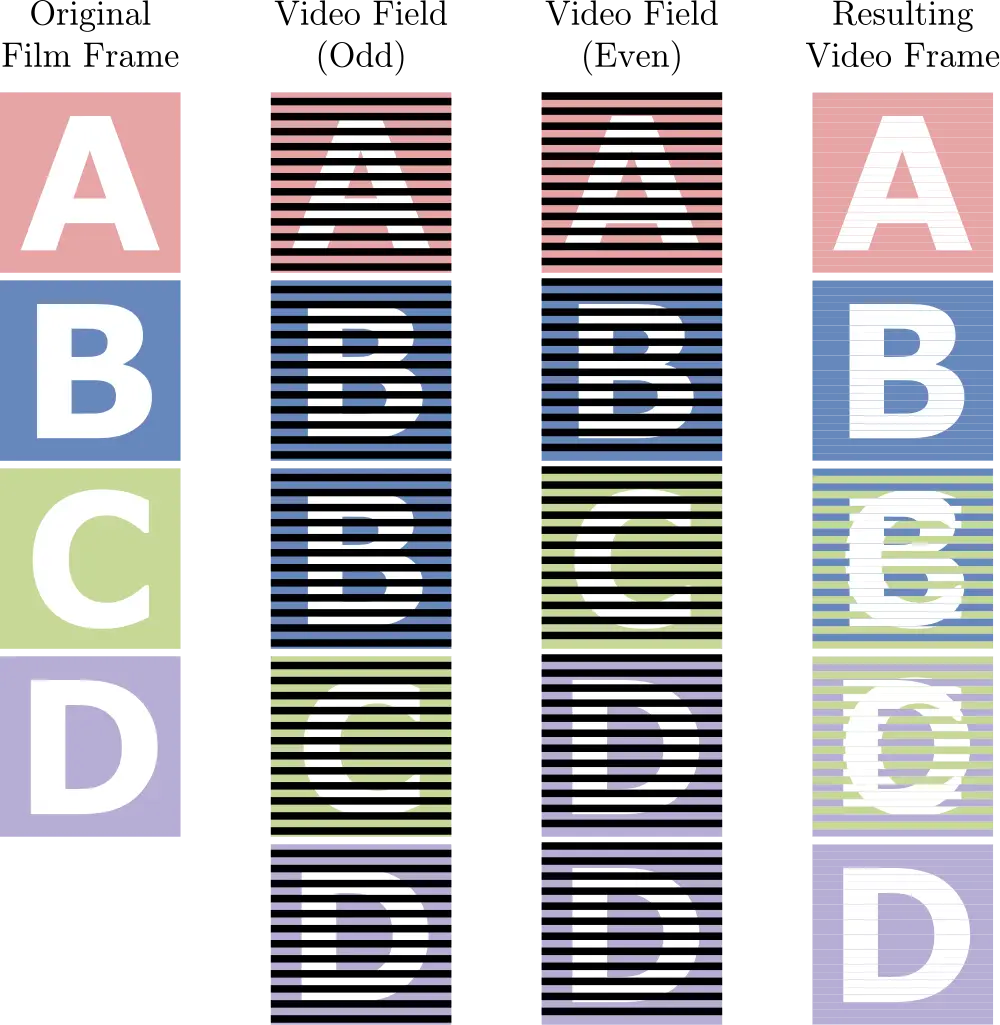

Pulldown

Converting movie material to NTSC, the US standard for television, isn’t as simple as speeding up the movie, because changing 24 fps to 29.97 fps would mean a 24.875% speed up. Unless you really love chipmunks, this may not be the best option.

Instead, a process called 3:2 pulldown was invented (among others), which became the most popular way of conversion. It’s the process of taking 4 original frames and converting them to 10 interlaced half-frames, or 5 full frames. Here’s a picture describing the process.

On an interlaced screen (i.e. a CRT), the video fields in the middle are shown in tandem, each of them interlaced, and so are made up of only every second row of pixels. The original frame, A, is split into two half frames that are both shown on screen. The next frame, B, is also split but the odd video field is shown twice, so it’s distributed across 3 half frames and, in total, we arrive at 10 distributed half frames for the 4 original full frames.

This works fairly well when portrayed on an interlaced screen (such as a CRT TV) at roughly 60 video fields per second (practically half frames, with every odd or even row blank), as you never see two of these together at once. But it can look terrible on displays that don’t support half frames and must composite them together again into 30 full frames, as in the row at the far right of the picture. This is because every 3rd and 4th frame in each group of five is stitched together from two different original frames, resulting in what I call a “Frankenframe”. This looks especially bad with fast motion, when the difference between the two original frames is significant.
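To see where the Frankenframes come from, here is a small sketch of the cadence. It only tracks which original frame each field comes from (no actual pixels), and `pulldown_2_3` and `weave` are hypothetical helper names, with `weave` standing in for a naive display that simply pairs up consecutive fields.

```python
def pulldown_2_3(film_frames):
    """Expand film frames into interlaced fields: the 1st, 3rd, ... frames
    contribute two fields, the 2nd, 4th, ... contribute three. Each field is
    (source_frame, parity), with parity alternating top/bottom."""
    fields = []
    for i, frame in enumerate(film_frames):
        repeat = 2 if i % 2 == 0 else 3
        for _ in range(repeat):
            parity = "top" if len(fields) % 2 == 0 else "bottom"
            fields.append((frame, parity))
    return fields


def weave(fields):
    """Pair consecutive fields into full frames, the way a naive progressive
    display would. Returns the source frame of each half."""
    return [(fields[i][0], fields[i + 1][0]) for i in range(0, len(fields) - 1, 2)]


fields = pulldown_2_3(["A", "B", "C", "D"])
video_frames = weave(fields)
mixed = [n for n, (top, bottom) in enumerate(video_frames, start=1) if top != bottom]

print(len(fields))   # 10 fields from 4 film frames
print(video_frames)  # [('A', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'D'), ('D', 'D')]
print(mixed)         # [3, 4] -> the Frankenframes
```

Four film frames become ten fields, which weave back into five video frames, and the third and fourth of those mix two different moments in time.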

So pulldown sounds nifty, but it isn’t a general solution either. Then what is? Is there really no holy grail? It turns out there is, and the solution is deceptively simple!

Welcome to the future: Modern TVs, G-Sync, Freesync

Much better than trying to work around a fixed refresh rate is, of course, a variable refresh rate that is always in sync with the frame rate, and that’s exactly what Nvidia’s G-Sync technology, AMD’s Freesync, and many modern TVs do.

G-Sync is a module built into monitors that allows them to synchronize to the output of the GPU instead of synchronizing the GPU to the monitor, while Freesync achieves the same without a module. It’s truly groundbreaking and eliminates the need for telecine, and it makes anything with a variable frame rate, such as games and web animations, look so much smoother.

Conclusion

You now know the good, bad and ugly of frame rates and how humans perceive motion. What you'll do with it is up to you.

Me personally, I came to the conclusion that really only 60 frames per second gives a decent balance that leads to a pleasant viewing experience with minimal motion blur and flickering, no jitter, great portrayal of motion and great compatibility with all displays without taxing the screen and GPUs too much.

If you make your own video content, the very best you can do is to record at 120 fps, so you can scale down to 60 fps, 30 fps and 24 fps when needed (sadly, to also support PAL’s 50 fps and 25 fps, you’ll need to drive it up to 600 fps), to get the best of all the worlds.
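Those numbers aren't magic, by the way. They're simply the least common multiple of the rates you want to hit, which guarantees that every target rate can be reached by keeping every n-th frame. A quick sketch (`master_rate` is a made-up helper name):

```python
from functools import reduce
from math import gcd


def lcm(a, b):
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)


def master_rate(target_rates):
    """Smallest capture rate that divides evenly into every target rate."""
    return reduce(lcm, target_rates)


print(master_rate([24, 30, 60]))          # 120
print(master_rate([24, 25, 30, 50, 60]))  # 600
```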

If this article influenced you in a positive way, I would love to hear from you.

To a silky smooth future!
